3D Object Manipulation Using Speech and Hand Gesture

DOI:

https://doi.org/10.37934/arca.31.1.112

Keywords:

Augmented Reality, 3D object manipulation, gesture interaction, natural language processing

Abstract

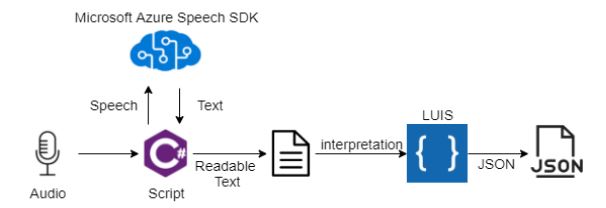

A natural user interface (NUI) enables users to interact with the digital world in the same way they interact with the physical world, through sensory input such as touch, speech, and gesture. Combining multiple modalities has recently become a trend in NUI design. Significant progress in speech and hand recognition technology has made both effective input modalities in human-computer interaction (HCI). However, several limitations still degrade performance, including complex command vocabularies and unnatural hand gestures for instructing the machine. This project therefore aims to develop an application that uses natural gesture and speech input for 3D object manipulation. The work was carried out in three phases: first, data collection and analysis; second, application structure design; and third, implementation of speech and gesture input for 3D object manipulation. The application uses the Leap Motion Controller for hand gesture tracking, and the Microsoft Azure Speech Cognitive Service and Microsoft Azure Language Understanding Intelligence Service for natural language speech recognition. The application was evaluated on command recognition accuracy, usability, and user acceptance. The results show that the developed approaches recognize speech commands and gesture interactions well, and user experience testing shows a high level of satisfaction with the application.
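The abstract describes speech (interpreted by a language-understanding service) and hand tracking feeding 3D object manipulation, but it does not specify how recognized commands are applied to an object. The following is a minimal sketch under stated assumptions, not the authors' implementation. The intent names (`MoveObject`, `RotateObject`, `ScaleObject`), the entity keys (`axis`, `degrees`, `factor`), and the `hand_delta` displacement vector are all hypothetical stand-ins for what a language-understanding service and a hand tracker might return. No Azure or Leap Motion API is called.

```python
from dataclasses import dataclass, field


@dataclass
class Object3D:
    # Hypothetical object state: position, Euler rotation in degrees, uniform scale.
    position: list = field(default_factory=lambda: [0.0, 0.0, 0.0])
    rotation: list = field(default_factory=lambda: [0.0, 0.0, 0.0])
    scale: float = 1.0


# Map spoken axis names to rotation-list indices.
AXES = {"x": 0, "y": 1, "z": 2}


def apply_command(obj, intent, entities, hand_delta=(0.0, 0.0, 0.0)):
    """Apply a recognized speech intent to obj (all names are hypothetical).

    intent/entities mimic a language-understanding result; entity values may
    arrive as strings, so they are converted with float().
    hand_delta mimics a hand displacement reported by a gesture tracker.
    """
    if intent == "MoveObject":
        # Speech names the action; the hand displacement supplies the offset.
        obj.position = [p + d for p, d in zip(obj.position, hand_delta)]
    elif intent == "RotateObject":
        # Default to the y axis and 90 degrees when entities are missing.
        axis = AXES[entities.get("axis", "y")]
        degrees = float(entities.get("degrees", 90))
        obj.rotation[axis] = (obj.rotation[axis] + degrees) % 360
    elif intent == "ScaleObject":
        factor = float(entities.get("factor", 2.0))
        if factor <= 0:
            raise ValueError("scale factor must be positive")
        obj.scale *= factor
    else:
        raise ValueError(f"unsupported intent: {intent}")
    return obj


if __name__ == "__main__":
    cube = Object3D()
    apply_command(cube, "RotateObject", {"axis": "y", "degrees": "45"})
    apply_command(cube, "ScaleObject", {"factor": "0.5"})
    apply_command(cube, "MoveObject", {}, hand_delta=(0.1, 0.0, -0.2))
    print(cube)
```

In this split, speech supplies the action and its discrete parameters, while the hand supplies a continuous quantity (here, a translation). This is one common way to fuse the two modalities, chosen here only for illustration.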