A Hybrid Model for Human Action Recognition Based on Local Semantic Features

DOI:

https://doi.org/10.37934/arca.33.1.721

Keywords:

Illumination, human action recognition, Encoder-Decoder, iSIFT

Abstract

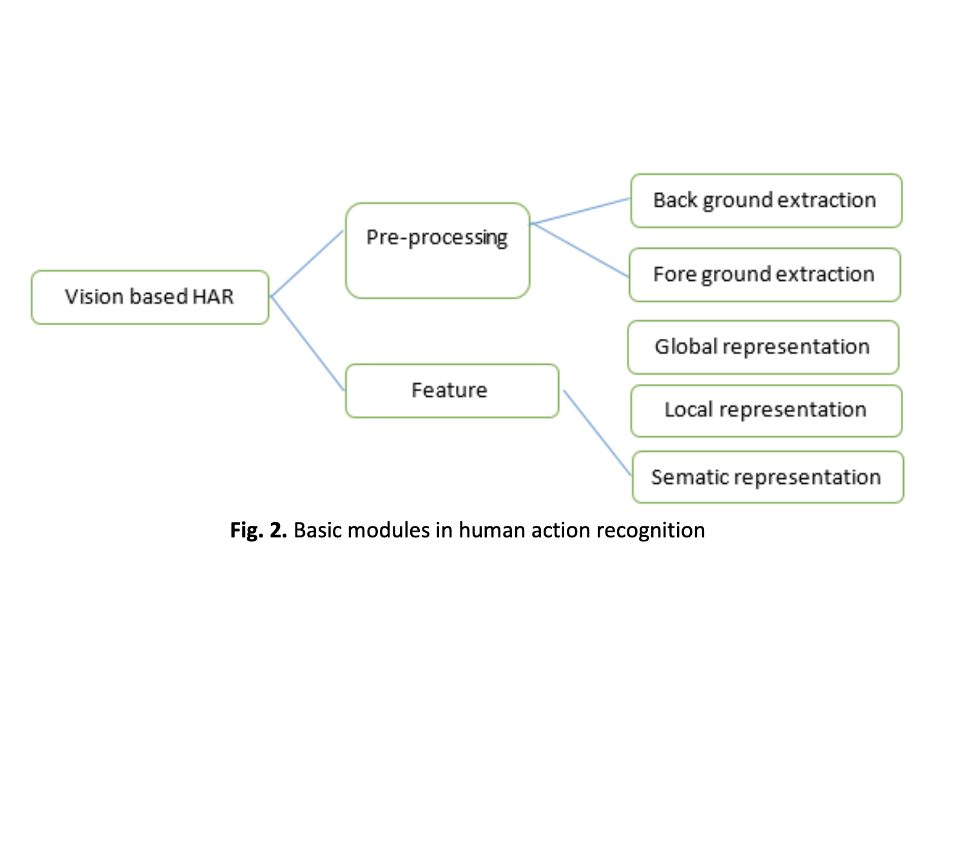

Human Action Recognition (HAR) is a challenging research problem in real-time applications such as video surveillance, automated surveillance, real-time tracking, and rescue missions. Complex video backgrounds, illumination changes, and variations in how humans perform actions are the main challenges of the domain, and research in this area is credible only if it addresses them. The domain is further complicated by camera settings, viewpoints, and inter-class similarities. The challenges of uncontrolled environments have reduced the performance of many well-designed models. Redundant features and excessive computing time for training and prediction are additional issues. This paper aims to design an automated human action recognition system that overcomes these issues. We propose a hybrid model with four modules. The first is an Encoder-Decoder Network (EDNet), used to extract deep features. The second is an Improved Scale-Invariant Feature Transform (iSIFT), used to reduce feature redundancy. The third is a Quadratic Discriminant Analysis (QDA) algorithm, which also reduces feature redundancy. The fourth is a Weighted Fusion strategy that merges the properties of the different essential features. The proposed technique is evaluated on two publicly available datasets, the KTH action dataset and UCF-101, and achieves average recognition accuracies of 94% and 90%, respectively.
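The abstract does not say how the Weighted Fusion module combines its inputs. As a rough illustration only, the sketch below assumes score-level fusion: two per-class score vectors, one from a deep-feature branch and one from a local-feature branch, are merged with a single convex weight. The function names, the weight `w_deep`, and the example scores are all hypothetical and are not taken from the paper.

```python
from typing import List, Sequence


def weighted_fusion(
    deep: Sequence[float],
    local: Sequence[float],
    w_deep: float = 0.75,
) -> List[float]:
    """Merge two per-class score vectors with a convex weight.

    Assumption: fusion happens at score level with one scalar weight.
    The paper's actual fusion rule may differ.
    """
    if len(deep) != len(local):
        raise ValueError("score vectors must have the same length")
    if not 0.0 <= w_deep <= 1.0:
        raise ValueError("w_deep must lie in [0, 1]")
    w_local = 1.0 - w_deep
    return [w_deep * d + w_local * l for d, l in zip(deep, local)]


def predict(fused: Sequence[float]) -> int:
    """Return the index of the highest fused score."""
    return max(range(len(fused)), key=lambda i: fused[i])


# Made-up scores for three action classes (illustrative values only).
deep_scores = [0.2, 0.5, 0.3]    # hypothetical deep-feature branch output
local_scores = [0.6, 0.3, 0.1]   # hypothetical local-feature branch output

fused = weighted_fusion(deep_scores, local_scores, w_deep=0.75)
label = predict(fused)
```

Because the weights sum to one, fusing two normalized score vectors yields another normalized vector, so the fused scores can still be read as class confidences. In practice the weight would be tuned on validation data rather than fixed by hand.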
